BACKGROUND

Overview

/ My Role

- Conducted design research for a spatial navigation solution, developing storylines and user flows.

- Studied the visualization of geographic data to inform design decisions for a 3D map interface.

- Created a pipeline for multi-character virtual meetings, including the design and visualization of virtual avatars.

- Designed CID (Cockpit Information Display) screens for AI-assisted driving experience scenarios, creating high-fidelity prototypes in Figma to visualize intuitive, user-centered interfaces that enhance driver interaction with AI features.

- Developed an AR Glasses application in Unity, integrating spatial navigation and virtual meeting features.

- Worked extensively with VFX Graph (Unity), Niagara (Unreal Engine), and particle systems (Blender), and built custom shaders to prototype novel effects that translate across multiple platforms.

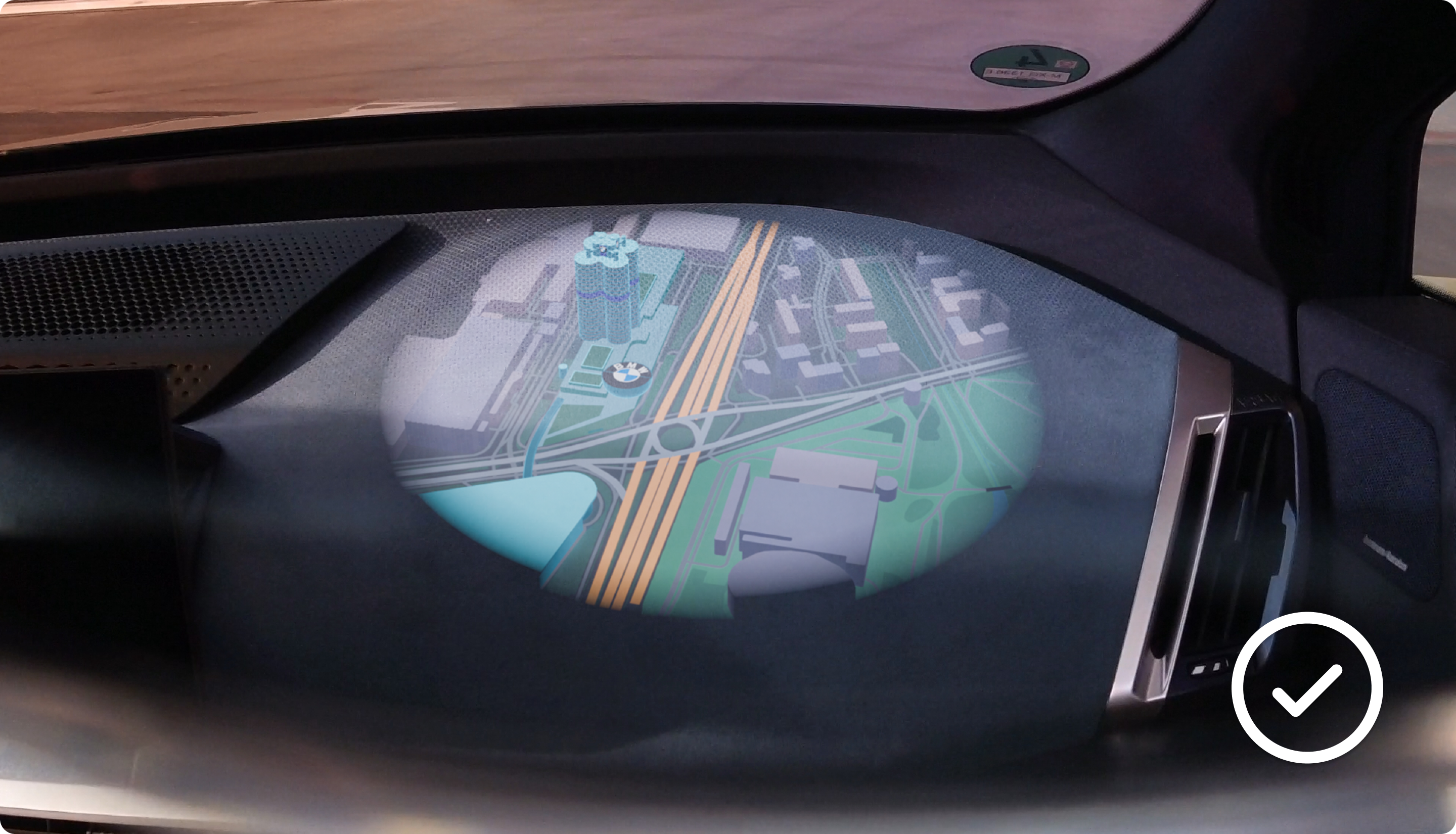

/ In-glasses recordings

RESEARCH

Beyond Windshield:

How does AR work for the in-car experience?

History of Augmented Reality for vehicles

BMW has been a pioneer in integrating augmented reality into the driving experience.

In 2003, it introduced the Head-Up Display (HUD) in the 5 Series, projecting essential driving information onto the windshield. By 2015, the MINI Augmented Vision concept showcased AR eyewear designed to enhance passenger experience with real-time projections.

Using Augmented Reality in 2024

With technology advancing rapidly by 2023, our team of interns aims to build on this legacy. Analyzing the latest industry developments, we seek to create a more immersive and versatile in-car experience, setting new standards for digital interaction in mobility.

A thought that sparked the journey:

How might we use augmented reality to reimagine what’s possible inside the car?

What if...

Virtual content could be placed in the real-world environment?

Our technology enables the integration of world-space elements into the in-car experience, allowing us to design beyond traditional screens and interfaces. By leveraging spatial UI, advanced real-time environmental awareness, and accurate spatial perception, we enhance passenger interactions with timely, contextual information that responds dynamically to their surroundings.

In this way, AR Glasses can do more

- Large movable field of view

- Great extension to a regular head-up display

- Entertainment for the passenger (Immersive experiences, Enhanced gaming, 3D videos)

- Improve safety for the driver (display driving-relevant content where you need it; keep eyes on the road)

Advantages of BMW AR Glasses over in-car displays

Display Content where you want

AR glasses offer a much larger usable viewing area than fixed in-car displays, so content can be placed anywhere in the environment.

Personalized content for everyone

Customized content for each person in the car, transforming every ride into a unique experience.

Immersive experience

Virtual elements blend seamlessly with the real world to create fully immersive experiences.

How might we improve driving experience with AR Glasses?

- How might AR help enhance safety?

- How might AR help enhance driving autonomy?

- How might we overcome the constraints of AR in the in-car environment?

Competitive Analysis: AR Applications in the Automotive Industry by 2023

By 2023, several automotive brands and tech companies had begun integrating AR glasses into the in-car experience. Brands like Rokid and Ideal focused on entertainment, letting users project media and games onto AR headsets and control them by gesture or mobile phone.

Geely, in partnership with Meizu, took a system-level approach by connecting the Flyme OS with vehicles, smartphones, and AR glasses to create a seamless information and media ecosystem.

Meanwhile, Audi and Magic Leap showcased a high-end concept at CES 2023, demonstrating immersive MR navigation and spatial control using Magic Leap 2. These cases reveal a growing trend of leveraging AR for in-car productivity, immersion, and intelligent integration—yet challenges remain in consistency, context awareness, and user adoption across diverse environments.

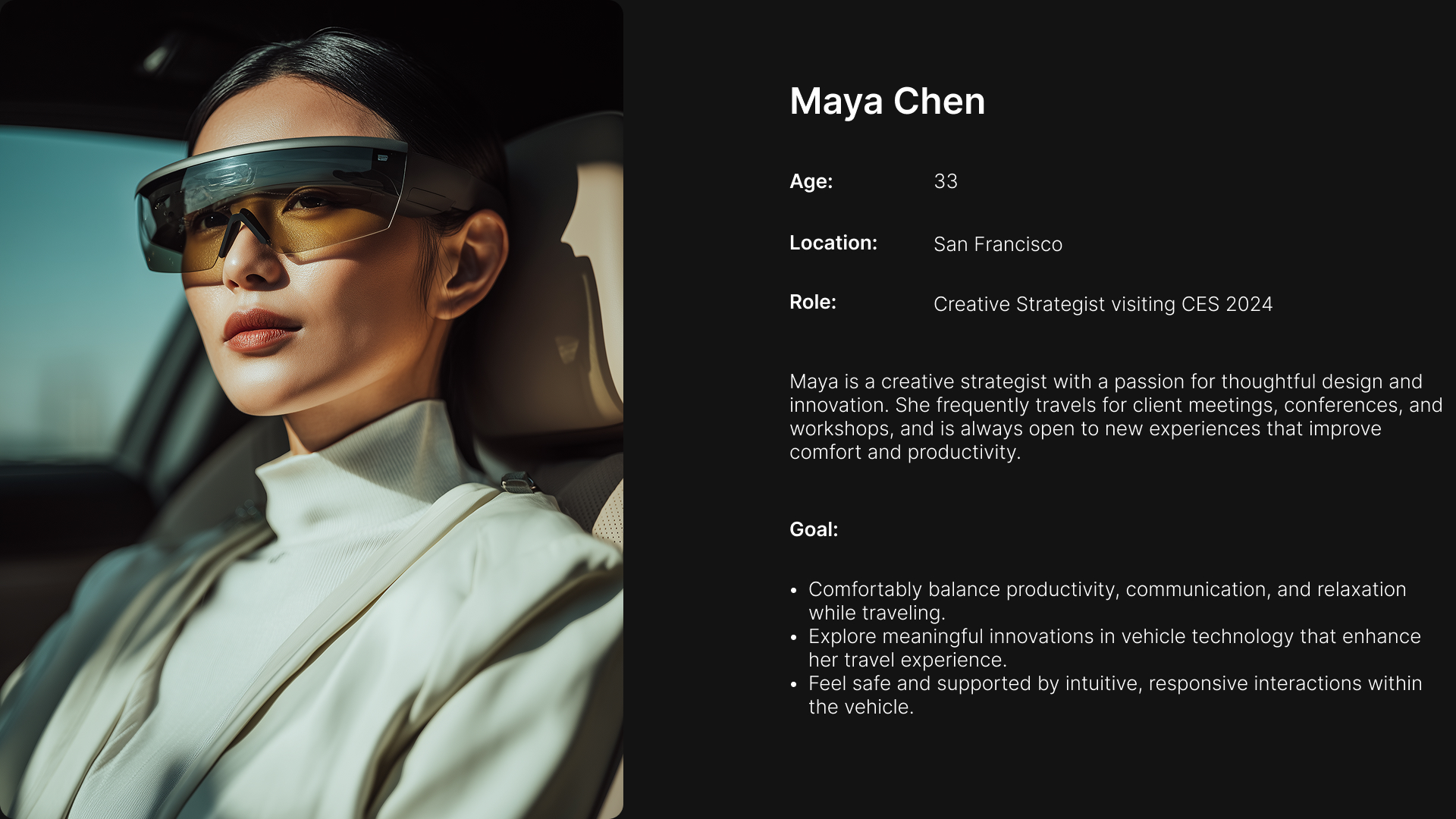

Persona Context: CES in Las Vegas

This project was designed to be showcased at CES 2024 in Las Vegas, which prompted us to tailor an experience flow for future-focused travelers: professionals who expect comfort, connectivity, and innovation on the move. This context shaped our priorities toward immersive, responsive in-car AR interactions.

DESIGN

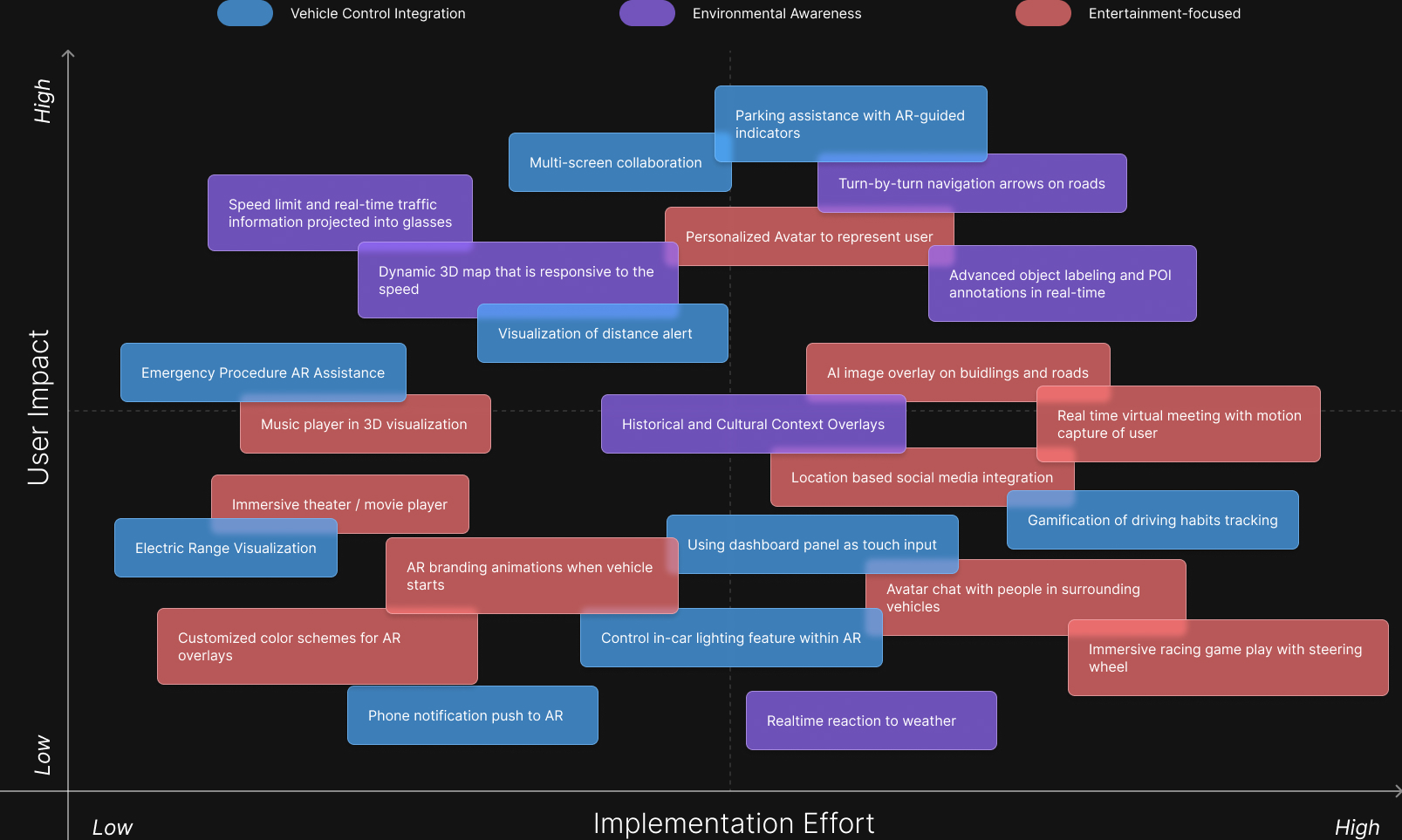

What functions?

This impact-effort matrix helped us evaluate and prioritize ideas from our brainstorming. Each idea was mapped by user impact and ease of implementation, then grouped into one of three categories: Vehicle Control Integration, Environmental Awareness, and Entertainment. It guided our team in identifying the high-value, feasible concepts to focus on first.

Our main AR function use cases

Spatial Navigation

- AR glasses provide real-time, on-road navigation overlays, ensuring drivers keep their eyes on the road.

- Key route guidance, lane change prompts, and turn-by-turn directions are seamlessly integrated into the driver's field of view.

Signs & Hazard Warnings

- AR-enhanced hazard detection alerts drivers about pedestrians, cyclists, and unexpected obstacles.

- Real-time traffic sign recognition displays speed limits, stop signs, and warnings directly in the driver’s line of sight.

- Adaptive alerts for potential collisions, blind-spot monitoring, and lane departure warnings.

Parking

- Visualized parking distance alerts help drivers park precisely with augmented parking guidelines.

- 360-degree AR overlays enhance spatial awareness by integrating vehicle cameras and sensors.

Entertainment

- AR glasses offer immersive media experiences, allowing passengers to watch movies, play AR-enhanced games, or browse interactive content.

- Multi-screen capability enables personalized entertainment without interfering with the driver’s focus.

Image source: https://wayray.com/deep-reality-display

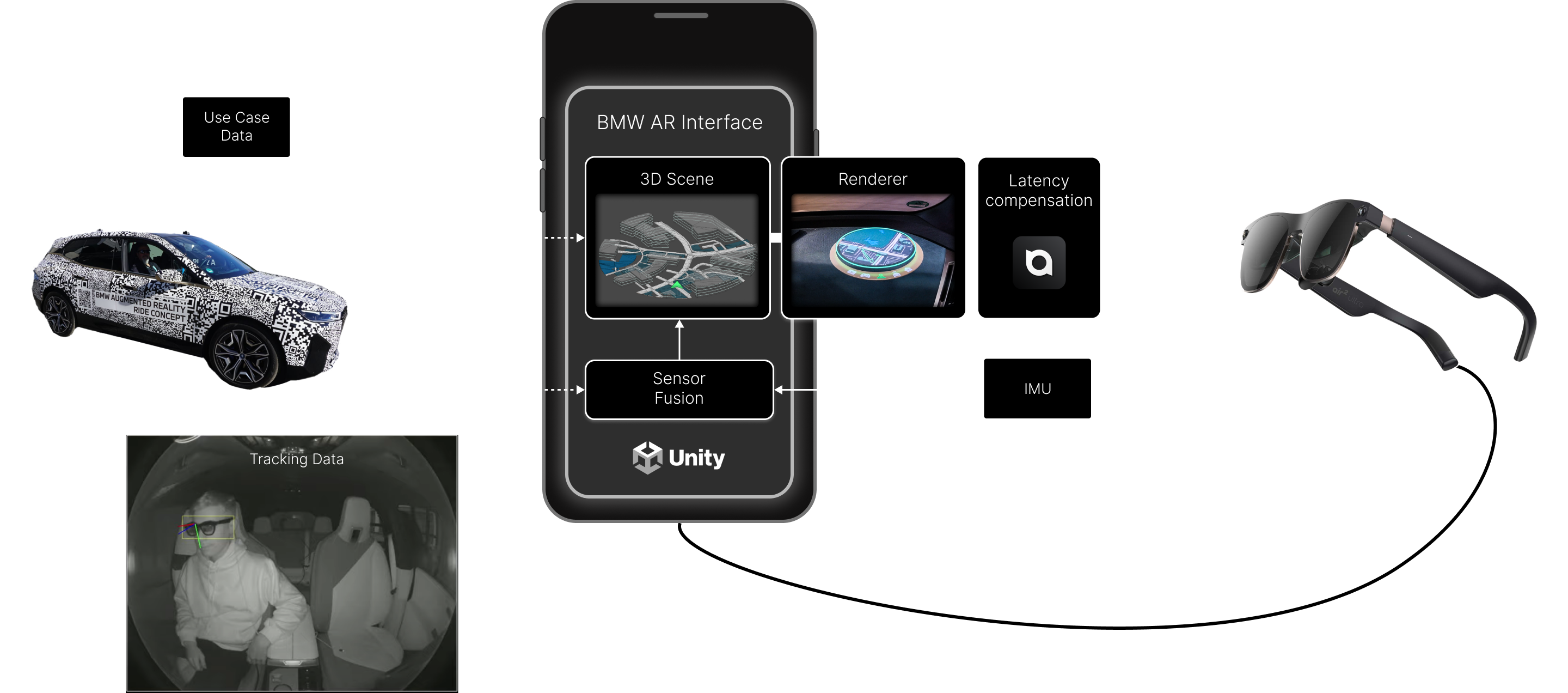

BMW + XReal: AR Platform

To support our concept, we developed a real-time AR pipeline integrating vehicle data, sensor fusion, and rendering. Content is processed through a Unity-based interface and streamed to the XReal glasses, enabling precise spatial overlays with low latency.

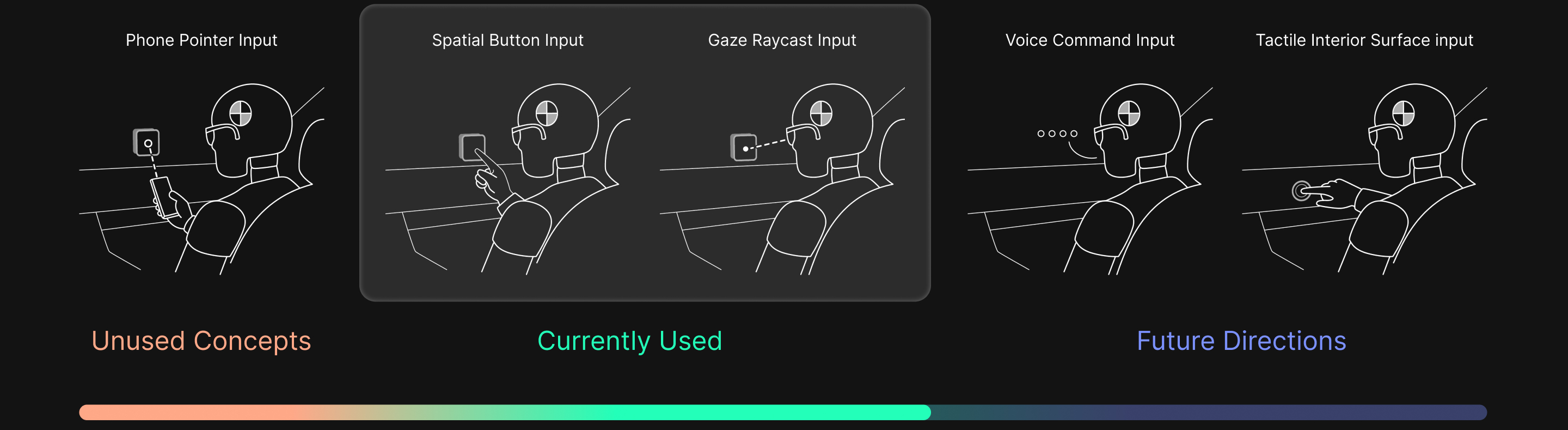

Input method exploration

From phone pointers to spatial buttons, we tested a variety of input methods. Some were ruled out early, while others—like gaze and gesture—proved effective. Looking ahead, we see strong potential in more ambient, low-effort input methods—such as voice control and touch-sensitive fabric panels—tailored to the dynamic in-car context.

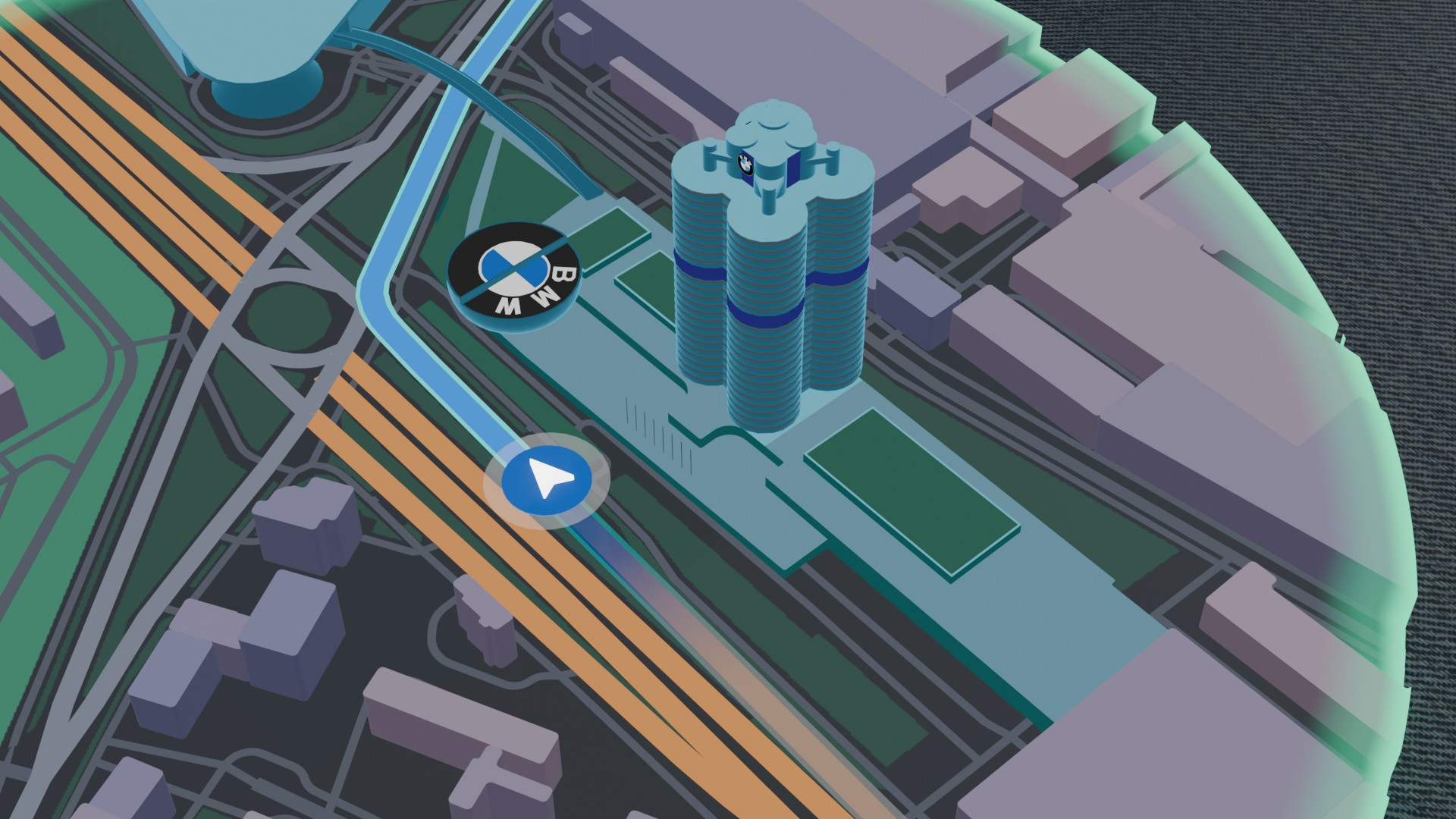

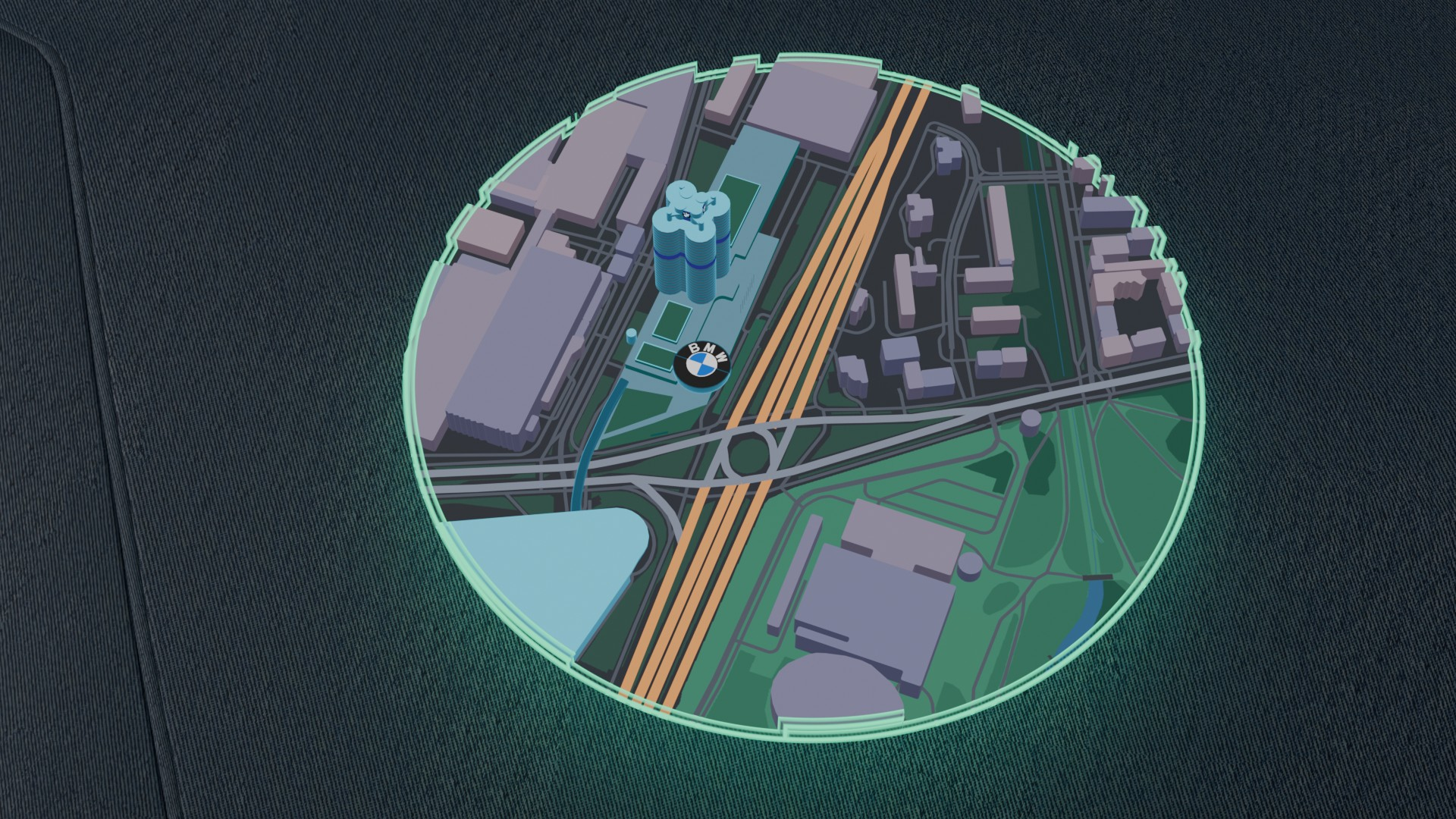

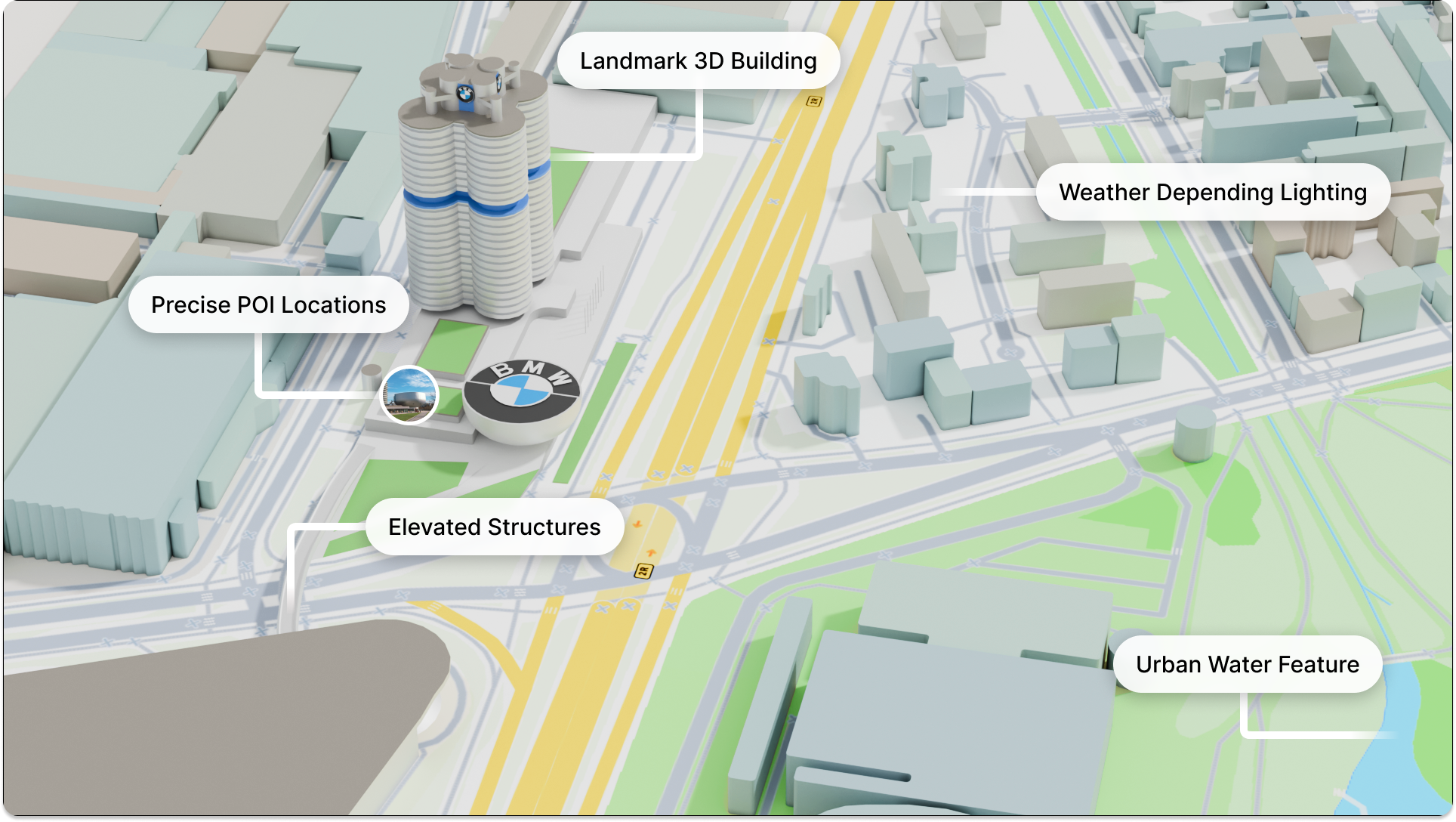

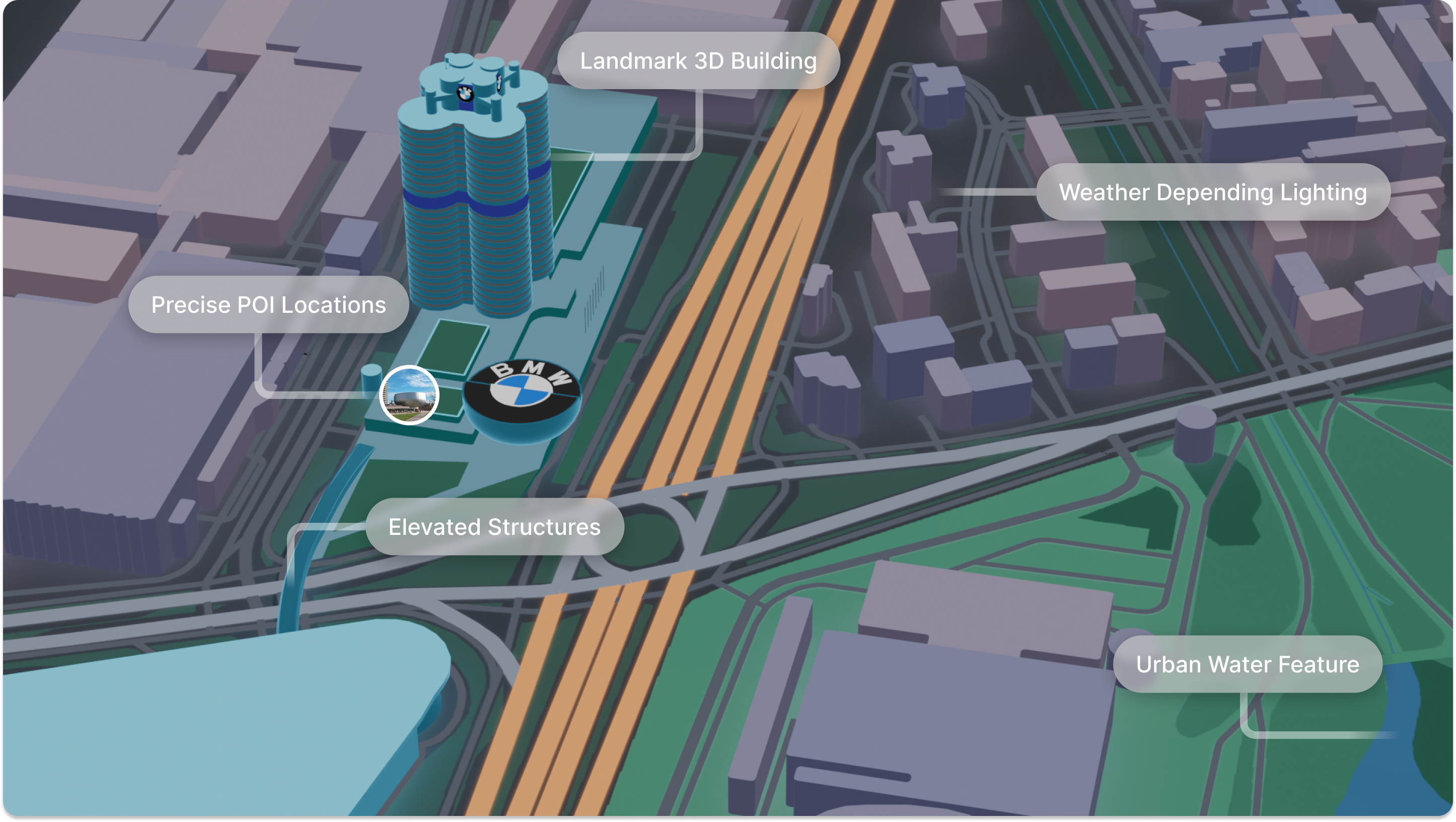

Map System: Day & Night Visualization

To evaluate the legibility and spatial clarity of our AR navigation elements, I built a procedural visualization tool using OpenStreetMap data, enabling real-time previews within Blender. This allowed us to test design decisions against realistic environments, assessing how our UI would perform under motion and through the lens of transparent AR displays.

While a day mode was initially explored, it posed significant readability issues under bright lighting due to the see-through nature of AR glasses. Additionally, a darker visual system better adapts to the diverse range of interior materials and finishes across vehicles. We therefore shifted our focus toward optimizing for low-light conditions.
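As a rough illustration of the preprocessing an OpenStreetMap-based preview tool needs, the sketch below reads building outlines from an OSM XML export and converts them into local metre coordinates that could then be extruded in Blender. It is not the project's actual code: the function names, the equirectangular projection, and the 3 m storey height are assumptions made for the example. Only the `building` and `building:levels` tag keys come from OpenStreetMap itself.

```python
import math
import xml.etree.ElementTree as ET

EARTH_RADIUS_M = 6_371_000.0
LEVEL_HEIGHT_M = 3.0  # assumed storey height, chosen for illustration only


def project(lat, lon, lat0, lon0):
    """Equirectangular projection to local metres around (lat0, lon0)."""
    x = math.radians(lon - lon0) * EARTH_RADIUS_M * math.cos(math.radians(lat0))
    y = math.radians(lat - lat0) * EARTH_RADIUS_M
    return (x, y)


def building_footprints(osm_xml, lat0, lon0):
    """Return a list of {"outline": [(x, y), ...], "height_m": float} dicts."""
    root = ET.fromstring(osm_xml)

    # Index every node by id so ways can look up their coordinates.
    nodes = {
        n.get("id"): (float(n.get("lat")), float(n.get("lon")))
        for n in root.iter("node")
    }

    footprints = []
    for way in root.iter("way"):
        tags = {t.get("k"): t.get("v") for t in way.findall("tag")}
        if "building" not in tags:
            continue  # skip roads, paths, and other non-building ways

        refs = [nd.get("ref") for nd in way.findall("nd")]
        outline = [project(*nodes[r], lat0, lon0) for r in refs if r in nodes]
        if len(outline) < 3:
            continue  # not enough points to form a polygon

        try:
            levels = float(tags.get("building:levels", "1"))
        except ValueError:
            levels = 1.0  # fall back when the tag is not numeric
        footprints.append({"outline": outline, "height_m": levels * LEVEL_HEIGHT_M})
    return footprints
```

Inside Blender, each outline could be turned into a mesh face and extruded by `height_m`; that step is left out here so the sketch runs without the `bpy` module.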

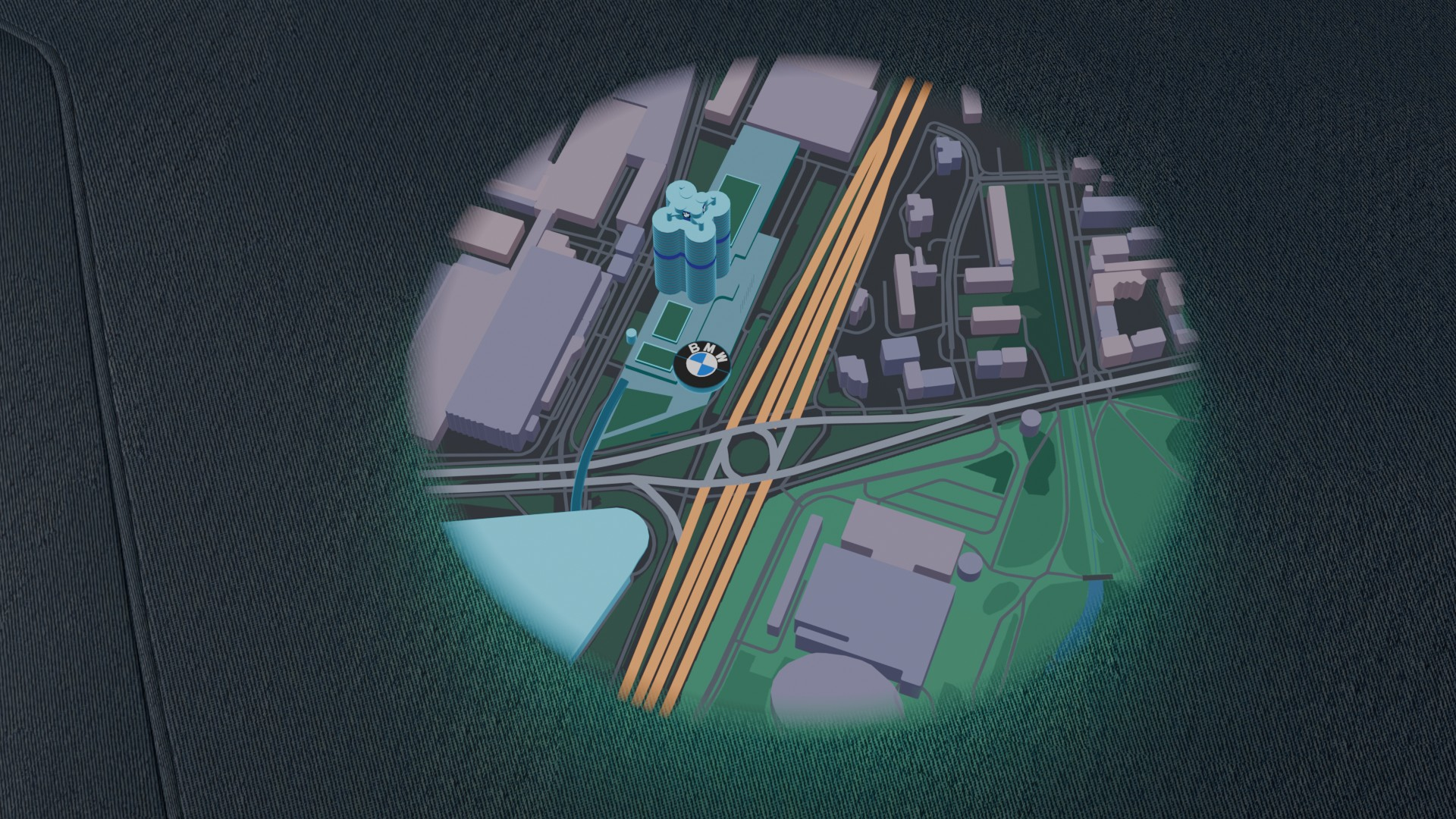

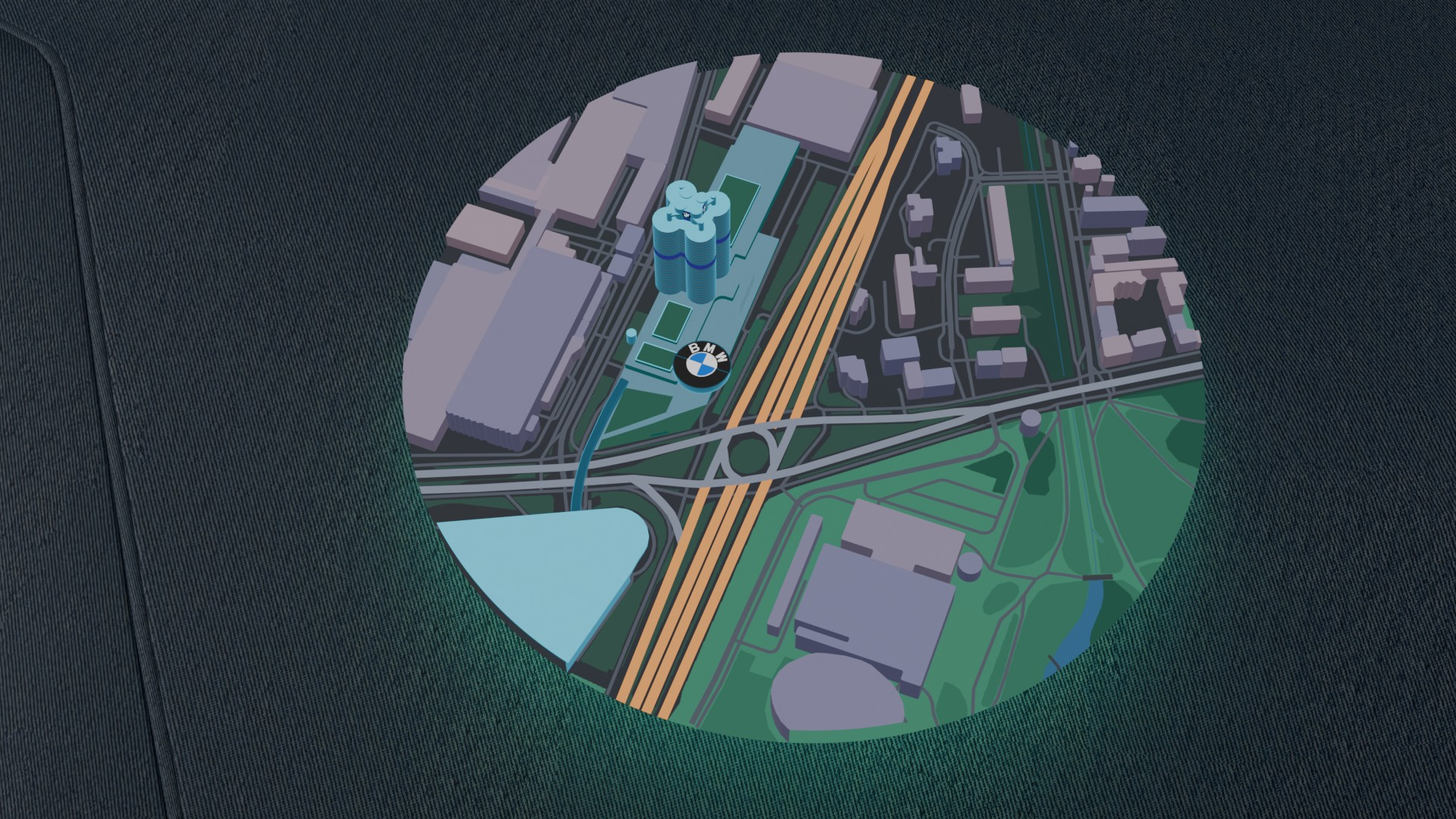

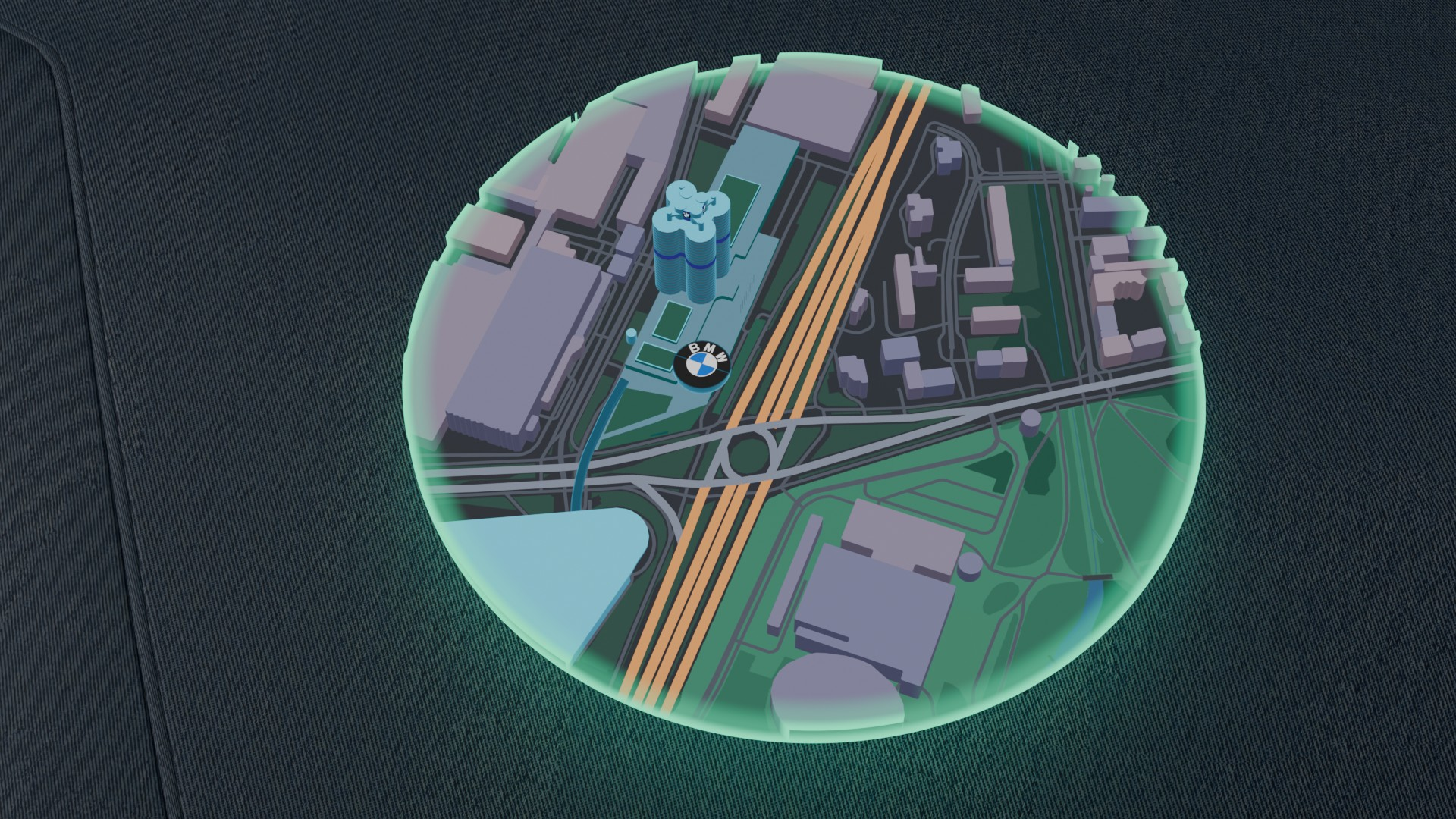

Map Periphery

Given the limited field of view in current AR hardware, I explored four distinct edge treatments to guide peripheral awareness and improve spatial continuity. These designs were tested to evaluate which approach best balanced subtlety with navigational clarity.

Spatial UI

Procedural LOD for Spatial Hierarchy

To enrich the 3D visual experience and support spatial understanding, I designed three procedural LOD models with varying levels of geometric detail. Each version was tested to determine how information density affects clarity, immersion, and performance in AR navigation.
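One common way to switch between levels of detail like these is by distance from the viewer. The sketch below shows that idea only; the level names and distance thresholds are hypothetical, and the source does not state how the three models were actually selected at runtime.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LodLevel:
    name: str
    max_distance_m: float  # level applies up to and including this distance


# Hypothetical thresholds, ordered from most to least detailed.
LOD_LEVELS = (
    LodLevel("detailed", 150.0),
    LodLevel("simplified", 600.0),
    LodLevel("blocks", float("inf")),
)


def select_lod(distance_m, levels=LOD_LEVELS):
    """Pick the most detailed level whose range still covers the distance."""
    for level in levels:
        if distance_m <= level.max_distance_m:
            return level
    return levels[-1]


if __name__ == "__main__":
    for d in (40, 300, 2_000):
        print(d, "m ->", select_lod(d).name)
```

Keeping the thresholds in one table makes it easy to tune how much geometry sits near the viewer when comparing clarity against rendering cost.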

Final Map Design